Hi, I'm Ben Hoover

I'm an AI Researcher studying memory

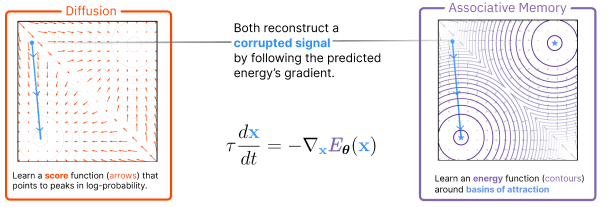

Understanding AI foundation models from the perspective of large Associative Memories.

I am a Machine Learning PhD student at Georgia Tech advised by Polo Chau and an AI Research Engineer with IBM Research. My research focuses on building more interpretable and parameter-efficient AI by rethinking the way we train and build deep models, taking inspiration from Associative Memories and Hopfield Networks. I like to visualize what happens inside AI models.

News

Apr 2024

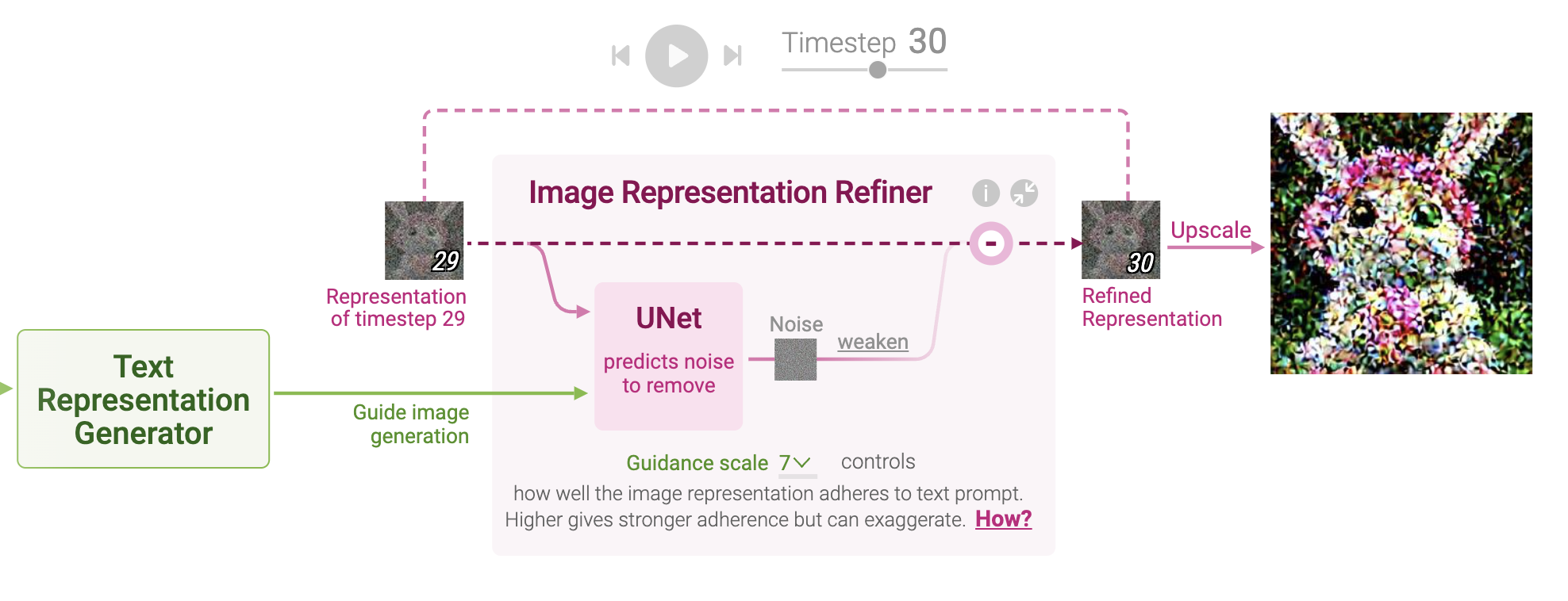

🎉 Diffusion Explainer accepted to the Demo Track at IJCAI 2024!

Oct 2023

Sep 2023

📜 Memory in Plain Sight released on arXiv!

Sep 2023

🎉 Energy Transformer accepted to NeurIPS'23!

Sep 2023

🧑🏫 Gave a talk about Energy Transformer to the McMahon Lab.

Sep 2023

🎉 ConceptEvo accepted to CIKM'23!

Aug 2023

🎉 Diffusion Explainer accepted to VIS'23!

Aug 2023

🚀 Released an Associative Memory demo that runs in your browser.

Aug 2023

🧑🏫 Selected as a panelist for the AMHN Workshop at NeurIPS'23.

Jun 2023

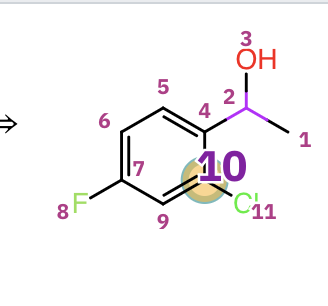

🚀 Released Molformer: a UI to explore AI-generated organic small molecules. See the blog post at IBM Research and the paper in Science Advances!

May 2023

🐣 Became a dad 🤗👶

Jan 2023

📣 Georgia Tech highlighted my research in its College of Computing News.

Memory Research Highlights

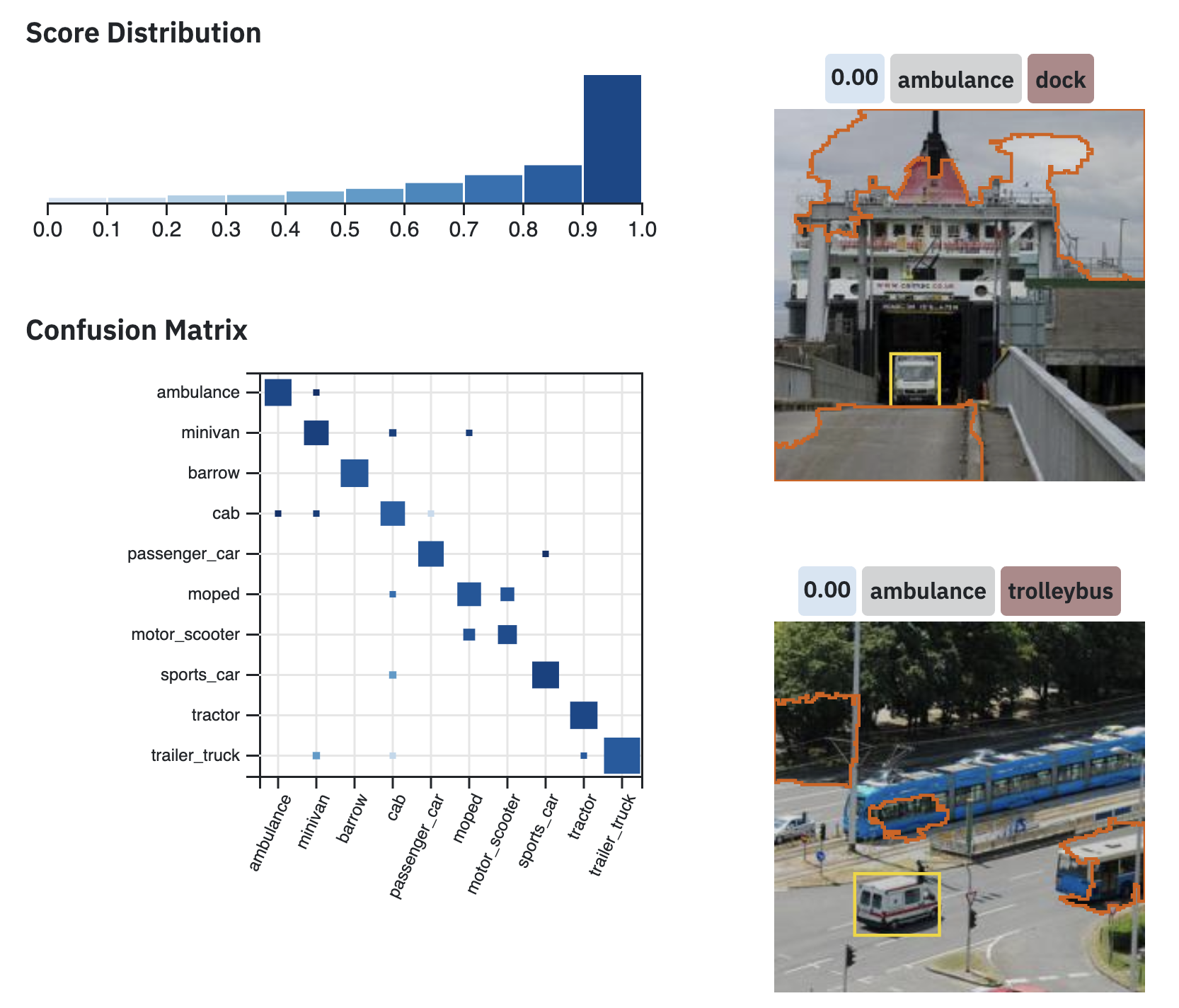

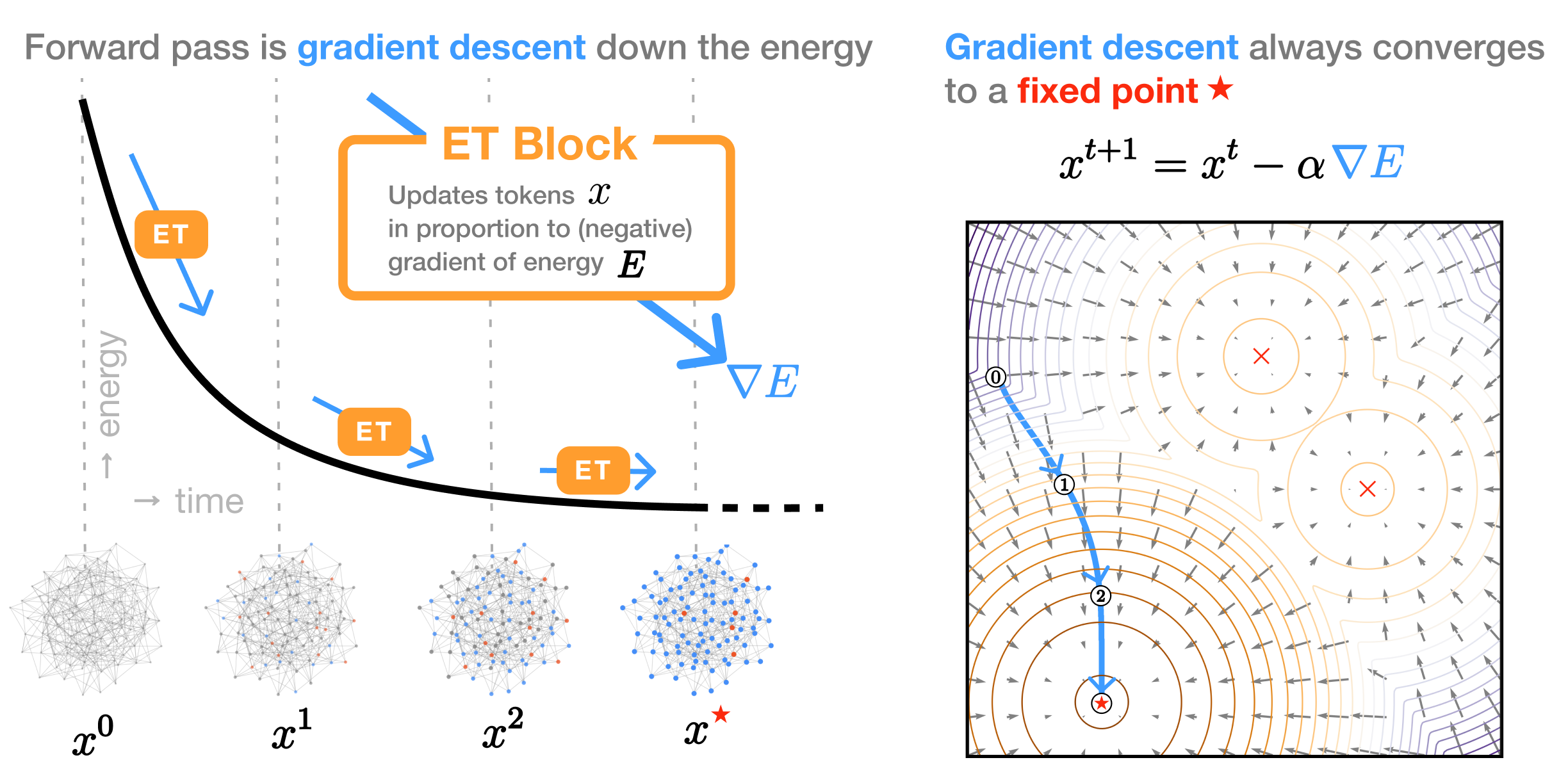

Energy Transformer

We derive an Associative Memory inspired by the famous Transformer architecture, where the forward pass through the model is memory retrieval by energy descent.
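The Energy Transformer's own energy function is defined in the paper and is not reproduced here. As a rough illustration of the general idea of "retrieval by energy descent," the sketch below instead uses the simpler modern (dense) Hopfield energy, whose gradient contains a softmax readout that looks like attention. The function names and the values of `beta`, `step`, and `n_steps` are illustrative assumptions, not part of the original work.

```python
import numpy as np

# Assumption: this is a stand-in modern Hopfield energy, NOT the Energy Transformer's energy.

def logsumexp(z):
    # Numerically stable log(sum(exp(z))).
    m = np.max(z)
    return m + np.log(np.sum(np.exp(z - m)))

def softmax(z):
    # Numerically stable softmax over a 1-D array.
    e = np.exp(z - np.max(z))
    return e / e.sum()

def energy(x, patterns, beta):
    """E(x) = -(1/beta) * logsumexp(beta * Xi @ x) + 0.5 * ||x||^2, with stored memories as rows of Xi."""
    return -logsumexp(beta * patterns @ x) / beta + 0.5 * x @ x

def energy_grad(x, patterns, beta):
    # dE/dx = x - Xi^T softmax(beta * Xi @ x); the second term is an attention-like readout of the memories.
    return x - patterns.T @ softmax(beta * patterns @ x)

def retrieve(query, patterns, beta=16.0, step=0.5, n_steps=50):
    """Hypothetical helper: gradient descent on the energy from a query; returns the final state and energy trace."""
    x = query.copy()
    trace = [energy(x, patterns, beta)]
    for _ in range(n_steps):
        x = x - step * energy_grad(x, patterns, beta)
        trace.append(energy(x, patterns, beta))
    return x, trace

# Usage: store 5 random unit-norm memories, then recover memory 0 from a noisy cue.
rng = np.random.default_rng(0)
d, n = 64, 5
patterns = rng.standard_normal((n, d))
patterns /= np.linalg.norm(patterns, axis=1, keepdims=True)
query = patterns[0] + 0.3 * rng.standard_normal(d) / np.sqrt(d)
x, trace = retrieve(query, patterns)
print(int(np.argmax(patterns @ x)))  # index of the best-matching stored memory
```

With `step` at most 1, each update cannot increase this particular energy, because the Hessian's eigenvalues are bounded above by 1. So the trace is non-increasing, and the state settles near the stored memory closest to the cue.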