Hi, I'm Ben Hoover

I'm an AI Researcher studying memory

Understanding AI foundation models from the perspective of large Associative Memories.

I am a Machine Learning PhD candidate at Georgia Tech advised by Polo Chau and an AI Research Engineer with IBM Research. My research focuses on building more interpretable and parameter-efficient AI by rethinking the way we train and build deep models, taking inspiration from Associative Memories and Hopfield Networks. I like to visualize what happens inside AI models.

News

Dec 2025

Our tutorial "Modern Methods in Associative Memory" is accepted to ICASSP 2026. See you in Barcelona! 🥳

Nov 2025

Our next iteration of the "New Frontiers in Associative Memory" workshop is accepted to ICLR 2026. See you in Rio! 🥳

Nov 2025

🏅 I received a Top Reviewer award at NeurIPS 2025 (top 8% of 24k+ reviewers).

Sep 2025

🎉 Dense Associative Memory with Epanechnikov energy accepted as 🏅Spotlight Poster (top 3% of 21k+ submissions) to NeurIPS'25 main conference. See you in San Diego ✈️!

Sep 2025

📢 Our Associative Memory Tutorial is accepted at AAAI 2026 in Singapore. See you there!

Aug 2025

🎉 Dense Associative Memory with Epanechnikov energy accepted as an 🥇oral poster to the MemVis Workshop @ICCV'25. See you in Hawaii 🌺!


Research Highlights

Epanechnikov DenseAM

Dense Associative Memories can produce emergent memories when we replace the Gaussian kernel with a KDE-optimal Epanechnikov kernel.
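To make the kernel swap concrete, here is a minimal Python sketch. It assumes the energy is the negative log of a sum of kernels centered on the stored patterns. The function names, the `bandwidth` parameter, and the one-dimensional toy patterns are illustrative assumptions, not the paper's implementation.

```python
import math

def gaussian_kernel(u):
    """Gaussian kernel (unnormalized): positive for every distance u."""
    return math.exp(-0.5 * u * u)

def epanechnikov_kernel(u):
    """Epanechnikov kernel (unnormalized): exactly zero once |u| >= 1."""
    return max(0.0, 1.0 - u * u)

def energy(x, memories, kernel, bandwidth=1.0):
    """Assumed energy: negative log of the summed kernel responses."""
    total = sum(kernel(math.dist(x, m) / bandwidth) for m in memories)
    # Outside every kernel's support the sum is zero, so the energy is infinite.
    return -math.log(total) if total > 0 else math.inf

# Toy example: two stored patterns on a line (hypothetical values).
memories = [(-0.6,), (0.6,)]
e_stored = energy((0.6,), memories, epanechnikov_kernel)  # at a stored pattern
e_middle = energy((0.0,), memories, epanechnikov_kernel)  # halfway between them
```

In this toy setup `e_middle` is lower than `e_stored`: the two compact kernels overlap at the midpoint and create a low-energy point that was never stored, while each stored pattern remains a local minimum of its own.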

Tutorial on Associative Memory

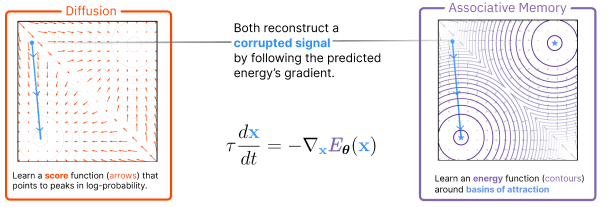

Energy-based Associative Memory transformed the field of AI, yet it remains poorly understood. We present a bird's-eye view of Associative Memory, beginning with the invention of the Hopfield Network and concluding with modern, dense storage versions that have strong connections to Transformers and kernel methods.

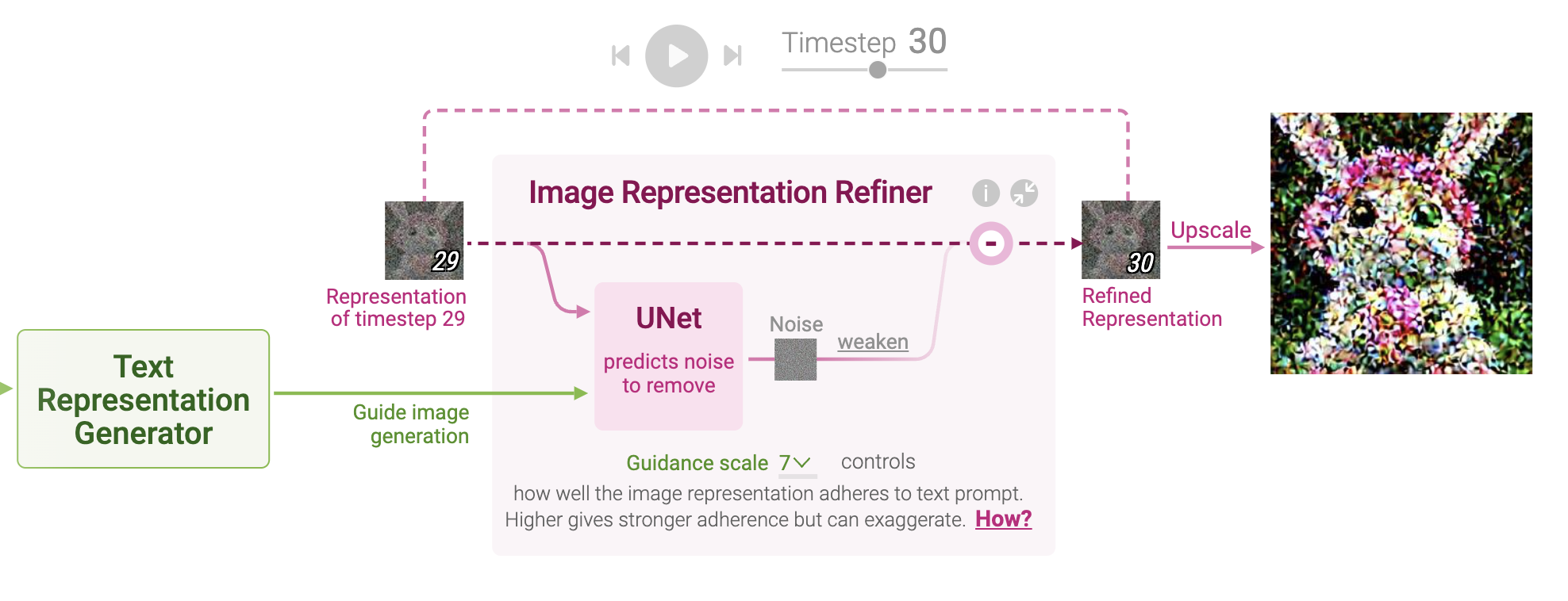

ConceptAttention

We discover how to extract highly salient features from the learned representations of Diffusion Transformers and use them to segment images by semantic concept. Our technique, ConceptAttention, outperforms all prior methods on single- and multi-class classification.

DenseAMs meet Random Features

DenseAMs can store an exponential number of memories, but each memory adds new parameters to the energy function. We propose a novel "Distributed representation for DenseAMs" (DrDAM) that allows us to add new memories without increasing the total number of parameters.
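The fixed-parameter idea can be sketched with random Fourier features, a standard approximation of the Gaussian kernel. This is an assumption made for illustration: the feature map, the function names (`make_feature_map`, `store`, `approx_kernel_sum`), and the toy patterns are hypothetical and may differ from what DrDAM actually uses.

```python
import math
import random

def make_feature_map(dim, n_features, sigma=1.0, seed=0):
    """Random Fourier features whose inner product approximates a Gaussian kernel."""
    rng = random.Random(seed)
    W = [[rng.gauss(0.0, 1.0 / sigma) for _ in range(dim)] for _ in range(n_features)]
    b = [rng.uniform(0.0, 2.0 * math.pi) for _ in range(n_features)]
    scale = math.sqrt(2.0 / n_features)

    def phi(x):
        return [scale * math.cos(sum(wi * xi for wi, xi in zip(w, x)) + bj)
                for w, bj in zip(W, b)]
    return phi

def store(memories, phi, n_features):
    """Fold all memories into one vector whose size does not depend on their count."""
    T = [0.0] * n_features
    for m in memories:
        for j, f in enumerate(phi(m)):
            T[j] += f
    return T

def approx_kernel_sum(x, T, phi):
    """Approximate sum over memories of exp(-||x - m||^2 / (2 sigma^2))."""
    return sum(f * t for f, t in zip(phi(x), T))

def exact_kernel_sum(x, memories, sigma=1.0):
    return sum(math.exp(-math.dist(x, m) ** 2 / (2 * sigma ** 2)) for m in memories)

# Hypothetical toy patterns and query.
memories = [(1.0, 0.0), (0.0, 1.0), (-1.0, -1.0)]
phi = make_feature_map(dim=2, n_features=4000)
T = store(memories, phi, n_features=4000)
approx = approx_kernel_sum((0.8, 0.1), T, phi)
exact = exact_kernel_sum((0.8, 0.1), memories)
```

Storing a fourth pattern only adds its features into `T`; the vector keeps the same length, which is the property the paragraph above describes. The price is that `approx` matches `exact` only up to a random-feature error that shrinks as `n_features` grows.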

Transformer Explainer

Transformers are the most powerful AI innovation of the last decade. Learn how they work by interacting with every mechanic from the comfort of your web browser. Taught in Georgia Tech CSE6242 Data and Visual Analytics (typically 250-300 students per semester).

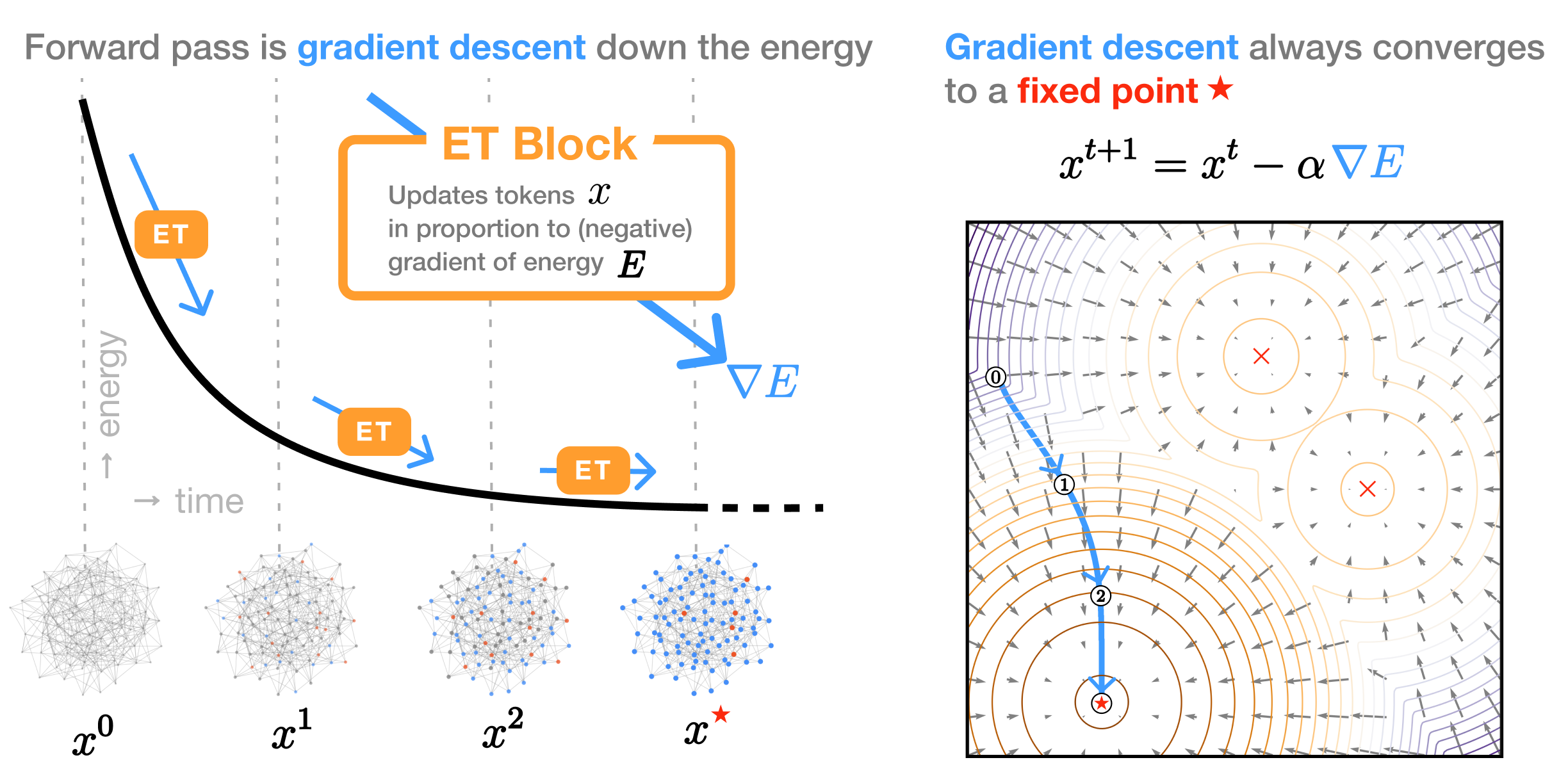

Energy Transformer

We derive an Associative Memory inspired by the famous Transformer architecture, where the forward pass through the model is memory retrieval by energy descent.
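"Memory retrieval by energy descent" can be illustrated with a much simpler model than the Energy Transformer itself. The sketch below uses a generic Dense Associative Memory with an assumed log-sum-exp energy, E(x) = -(1/beta) log sum_mu exp(-(beta/2) ||x - xi_mu||^2), and runs plain gradient descent on it. The names `retrieve`, `beta`, `step`, and `n_steps` are illustrative choices, not the paper's API.

```python
import math

def softmax(scores):
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    z = sum(exps)
    return [e / z for e in exps]

def energy_grad(x, memories, beta):
    """Gradient of the assumed energy: x minus the softmax-weighted mean of memories."""
    scores = [-0.5 * beta * math.dist(x, m) ** 2 for m in memories]
    w = softmax(scores)
    return [xi - sum(wk * m[i] for wk, m in zip(w, memories))
            for i, xi in enumerate(x)]

def retrieve(query, memories, beta=8.0, step=0.5, n_steps=50):
    """Descend the energy from a noisy query toward a nearby stored pattern."""
    x = list(query)
    for _ in range(n_steps):
        g = energy_grad(x, memories, beta)
        x = [xi - step * gi for xi, gi in zip(x, g)]
    return x

# Hypothetical stored patterns and a corrupted query near the first one.
memories = [(1.0, 0.0), (0.0, 1.0), (-1.0, -1.0)]
recalled = retrieve((0.8, 0.1), memories)
```

Each update moves the state toward a softmax-weighted average of the stored patterns, so the descent step resembles an attention operation, and the query settles close to the pattern `(1.0, 0.0)`.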